Datera presented at Tech Field Day 18 and provided additional insights on their software-defined storage solution as well as perspectives on modern Data Centers. Datera’s Team:

– Al Woods, CTO

– Nic Bellinger, Chief Architect and Co-Founder

– Bill Borsari, Head of Field Systems Engineering

– Shailesh Mittal, Sr. Engineering Architect

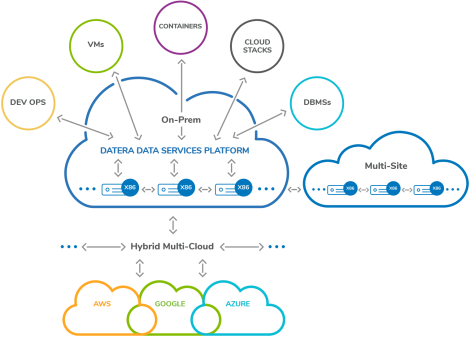

The presentation started with an introduction to enterprise software-defined storage, the characteristics of a software-defined Data Center, an overview of the pillars of their data services platform, business use cases, and the current server partners whose hardware their software runs on.

Datera’s definition of a software-defined Data Center includes these characteristics:

1 – All hardware is virtualized and delivered as a service

2 – Control of the Data Center is fully automated by software

3 – Supports legacy and cloud-native applications

4 – Radically lower cost versus hard-wired Data Centers

With this in mind, Datera also presented the building blocks of the Datera Data Services Platform architecture. This is especially relevant for those interested in what is “under the hood”: how the platform is built and where standard functions such as dedup, tiering, compression, and snapshots happen. Datera focused on demonstrating how the architecture is optimized to overcome traditional storage management and data movement challenges. Some background in storage operations helps to fully appreciate the evolution to a platform built for always-on, transparent data movement on a distributed, lockless, application-intent-driven architecture running on x86 servers. The overall solution is driven by application service level objectives (SLOs) and is intended to provide a “self-driving” storage infrastructure.
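To make the SLO-driven idea a little more concrete, here is a minimal Python sketch of what “application intent” could look like: the application states what it needs, and the software decides where the data lives. This is not Datera’s API. The `Slo` and `MediaTier` classes, the `pick_tier` function, and all of the numbers are hypothetical and exist only to illustrate the concept.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slo:
    """Hypothetical application intent: what a volume needs, not where it lives."""
    min_iops: int
    max_latency_ms: float


@dataclass(frozen=True)
class MediaTier:
    """Hypothetical class of storage nodes; all figures are made up."""
    name: str
    iops: int
    latency_ms: float
    cost_per_gb: float


def pick_tier(slo, tiers):
    """Return the cheapest tier that satisfies the SLO, or None if none does."""
    candidates = [
        t for t in tiers
        if t.iops >= slo.min_iops and t.latency_ms <= slo.max_latency_ms
    ]
    return min(candidates, key=lambda t: t.cost_per_gb, default=None)


# Illustrative tiers only; not taken from the presentation.
TIERS = [
    MediaTier("nvme", iops=500_000, latency_ms=0.2, cost_per_gb=0.50),
    MediaTier("ssd", iops=100_000, latency_ms=1.0, cost_per_gb=0.20),
    MediaTier("hybrid", iops=10_000, latency_ms=5.0, cost_per_gb=0.05),
]

database_slo = Slo(min_iops=50_000, max_latency_ms=2.0)
chosen = pick_tier(database_slo, TIERS)
```

In a real system, the same decision would be re-evaluated continuously as the SLO or the hardware changes, which is where the transparent data movement described above comes in.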

There were no lab demos during the sessions; however, there were some unique slide animations illustrating what Datera calls continuous availability and perpetual storage service, which helped contextualize how the solution works. The last part of the presentation covered containers and microservices applications, and how Datera provides enough flexibility and safeguards to address such workloads and their portable nature.

Modern Data Center

In a whiteboard explanation, Datera also shared that they are seeing more Clos-style (leaf-spine) network architectures in modern Data Centers, and that they see their storage nodes as “servers” running in a rack-scale design alongside independent compute nodes. The network is the backplane and an integral part of the technology, as compute nodes access the server-based software storage over the network through high-performance iSCSI interfaces. The platform also supports S3 object storage access.

One of the things I learned during the presentation is their ability to peer directly with the top-of-rack (leaf) switches via BGP. The list of networking vendors is published here. Essentially, Datera integrates SLO-based L3 network function virtualization (NFV) with SLO-based data virtualization to automatically provide secure and scalable data connectivity as a service for every application. It accomplishes this by running a software router (BGP) on each of its nodes as a managed service, similar to Project Calico. Al Woods wrote very eloquently about the benefits of L3 networking in this article. I find it interesting how BGP is making its way inside Data Centers in some shape or form.
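The general idea behind this kind of node-level BGP peering can be sketched with a toy routing table. The Python below is not a BGP implementation and does not reflect Datera’s actual behavior. It assumes, in the style of Project Calico, that a node announces a host route (/32) for a service address to its leaf switch, and that the route follows the service when it moves to another node. The `LeafSwitch` class and all addresses are hypothetical.

```python
import ipaddress


class LeafSwitch:
    """Toy stand-in for a top-of-rack switch routing table (not a BGP speaker)."""

    def __init__(self):
        self.routes = {}  # ip_network -> next-hop ip_address

    def announce(self, prefix, next_hop):
        """Record a route as if a peer had advertised it."""
        self.routes[ipaddress.ip_network(prefix)] = ipaddress.ip_address(next_hop)

    def withdraw(self, prefix):
        """Remove a route as if the peer had withdrawn it."""
        self.routes.pop(ipaddress.ip_network(prefix), None)

    def next_hop(self, dest):
        """Longest-prefix match: the most specific matching route wins."""
        addr = ipaddress.ip_address(dest)
        matches = [p for p in self.routes if addr in p]
        if not matches:
            return None
        best = max(matches, key=lambda p: p.prefixlen)
        return str(self.routes[best])


leaf = LeafSwitch()
leaf.announce("10.0.0.0/24", "192.168.1.1")    # generic subnet route
leaf.announce("10.0.0.5/32", "192.168.1.11")   # storage node A owns the service IP
before_move = leaf.next_hop("10.0.0.5")

# The service moves: node A withdraws the host route, node B announces it.
leaf.withdraw("10.0.0.5/32")
leaf.announce("10.0.0.5/32", "192.168.1.12")
after_move = leaf.next_hop("10.0.0.5")
```

The point of the sketch is that clients keep using the same service address while the network learns its new location through routing, with no L2 tricks required.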

In addition to the L3 networking integration for more Data Center awareness, Datera adopts an API-first design in which everything is API-driven, a policy-based approach from day 1 that is meant to make operations at scale easy, and targeted data placement that distributes data across the physical infrastructure for availability and resilience. This is all aligned with the concept of a scale-out modern Data Center.
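As a rough illustration of targeted data placement, the Python sketch below spreads the replicas of a volume across distinct racks so that losing one rack never takes out every copy. This is a simplified assumption about how failure-domain-aware placement can work in general, not a description of Datera’s algorithm. The `place_replicas` function and the node and rack names are hypothetical.

```python
from collections import defaultdict


def place_replicas(nodes, replicas):
    """Pick `replicas` nodes so that each one sits in a different rack.

    `nodes` maps a node name to the rack (failure domain) it lives in.
    """
    by_rack = defaultdict(list)
    for node, rack in sorted(nodes.items()):
        by_rack[rack].append(node)

    if replicas > len(by_rack):
        raise ValueError(
            f"need {replicas} racks for {replicas} replicas, "
            f"only {len(by_rack)} available"
        )

    # Take the first node from each of the first `replicas` racks.
    return [by_rack[rack][0] for rack in sorted(by_rack)[:replicas]]


# Hypothetical inventory: two nodes in rack A, one each in racks B and C.
NODES = {"n1": "rackA", "n2": "rackA", "n3": "rackB", "n4": "rackC"}
placement = place_replicas(NODES, 3)
```

A production placement engine would also weigh capacity, load, and media type, but the rack-spreading constraint is the part that provides the availability and resilience mentioned above.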

As a follow-up, Datera will also be presenting at Storage Field Day 18, where there may be more opportunity to delve into their technology and get a glimpse of the user interface and multi-tenancy capabilities.
